Tuning models with Optuna#

In this notebook we show how to tune the hyperparameters of a GluonTS model with Optuna. In this example, we tune a PyTorch-based DeepAREstimator.

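Note: to keep the running time of this example short, we use a small dataset and tune only two hyperparameters over a very small number of tuning rounds ("trials"). In real applications, especially on larger datasets, you will probably need to enlarge the search space and increase the number of trials.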
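As a rough sketch of what a wider search space could look like, the function below adds two more hyperparameters to the two tuned in this notebook. It assumes that the PyTorch DeepAREstimator accepts `dropout_rate` and `lr` arguments and that the ranges shown are sensible starting points; both are assumptions, not recommendations from this tutorial. `RandomTrialStub` is a hypothetical stand-in for an Optuna trial so that the snippet runs without Optuna installed; a real Optuna trial offers `suggest_int` and `suggest_float` with the same call shape used here.

```python
import math
import random


class RandomTrialStub:
    """Hypothetical stand-in for an Optuna trial, for illustration only."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)
        self.params = {}

    def suggest_int(self, name, low, high):
        # Draw an integer uniformly from [low, high], both ends included.
        value = self._rng.randint(low, high)
        self.params[name] = value
        return value

    def suggest_float(self, name, low, high, log=False):
        # With log=True, sample uniformly in log space (useful for learning rates).
        if log:
            value = math.exp(self._rng.uniform(math.log(low), math.log(high)))
        else:
            value = self._rng.uniform(low, high)
        self.params[name] = value
        return value


def get_params_wide(trial):
    """Wider search space: the ranges below are assumptions for illustration."""
    return {
        "num_layers": trial.suggest_int("num_layers", 1, 5),
        "hidden_size": trial.suggest_int("hidden_size", 10, 50),
        "dropout_rate": trial.suggest_float("dropout_rate", 0.0, 0.3),
        "lr": trial.suggest_float("lr", 1e-4, 1e-2, log=True),
    }


params = get_params_wide(RandomTrialStub(seed=0))
print(params)
```

Every added dimension makes the search space larger, so the number of trials usually has to grow along with it.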

Data loading and processing#

[1]:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import json

from gluonts.dataset.repository import get_dataset

from gluonts.dataset.util import to_pandas

[2]:

dataset = get_dataset("m4_hourly")

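Extracting and splitting the training and test datasets#

In general, the datasets provided by GluonTS consist of three parts:

- dataset.train is a collection of data entries used for training. Each entry corresponds to one time series.
- dataset.test is a collection of data entries used for inference. The test dataset is an extended version of the training dataset: each time series has an additional window at its end that was not seen during training. The length of this window equals the recommended prediction length.
- dataset.metadata contains metadata about the dataset, such as the frequency of the time series, a recommended prediction horizon, and associated features.

We can inspect the details of dataset.metadata.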
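The relationship between the training and test parts can be illustrated with plain Python lists. This is a toy sketch with made-up numbers and a hypothetical horizon of 3, not the m4_hourly data: each test series is the matching training series plus one unseen window of `prediction_length` points at the end.

```python
# Toy illustration with made-up values (not the m4_hourly data).
prediction_length = 3  # hypothetical horizon chosen for this sketch

train_series = [
    {"item_id": "A", "target": [1.0, 2.0, 3.0, 4.0, 5.0]},
    {"item_id": "B", "target": [10.0, 20.0, 30.0, 40.0]},
]

# The window at the end of each series that training never sees.
future_windows = {"A": [6.0, 7.0, 8.0], "B": [50.0, 60.0, 70.0]}

# A test entry is the training entry extended by its future window.
test_series = [
    {
        "item_id": entry["item_id"],
        "target": entry["target"] + future_windows[entry["item_id"]],
    }
    for entry in train_series
]

for train_entry, test_entry in zip(train_series, test_series):
    extra = len(test_entry["target"]) - len(train_entry["target"])
    print(train_entry["item_id"], "extra points in test:", extra)
```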

[3]:

print(f"Recommended prediction horizon: {dataset.metadata.prediction_length}")

print(f"Frequency of the time series: {dataset.metadata.freq}")

Recommended prediction horizon: 48

Frequency of the time series: H

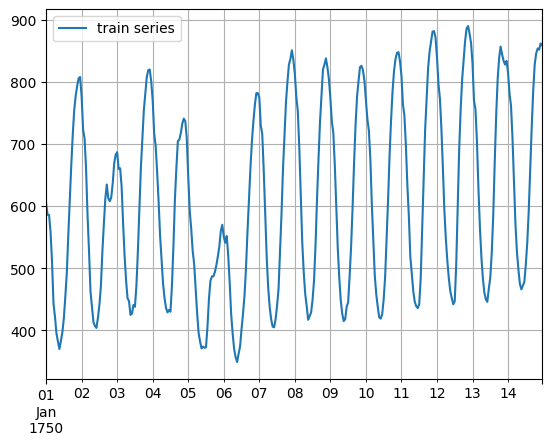

This is what the data looks like (first training series, first two weeks of data):

[4]:

to_pandas(next(iter(dataset.train)))[: 14 * 24].plot()

plt.grid(which="both")

plt.legend(["train series"], loc="upper left")

plt.show()

Tuning the parameters of the DeepAR estimator#

[5]:

import optuna

import torch

from gluonts.dataset.split import split

from gluonts.evaluation import Evaluator

from gluonts.torch.model.deepar import DeepAREstimator

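We will now tune the DeepAR estimator on our training data using Optuna. We choose two hyperparameters, num_layers and hidden_size, to optimize.

First, we define a dataentry_to_dataframe function that transforms a DataEntry into a pandas.DataFrame. Second, we define a DeepARTuningObjective class that is used in Optuna's tuning process. The class can be configured with the dataset, the prediction length, the data frequency, and the metric used to evaluate the model.

In the __init__ method, we initialize the objective and split the dataset using the split function that GluonTS provides:

- validation_input: the input part used in validation
- validation_label: the label part used in validation

In the get_params method, we define which hyperparameters are tuned and within which ranges. In the __call__ method, we define how the DeepAREstimator is used in training and validation.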
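Conceptually, holding out a validation window works as sketched below for a single series. This is a plain-Python illustration under the assumption that a negative offset of `prediction_length` cuts off exactly the last window; `split_last_window` is a hypothetical helper written for this sketch, not a GluonTS function.

```python
def split_last_window(series, prediction_length):
    """Hold out the final `prediction_length` points of one series."""
    if len(series) <= prediction_length:
        raise ValueError("series is too short to hold out a full window")
    # Everything before the cut: used for training and as validation input.
    history = series[:-prediction_length]
    # The held-out window: the ground truth that forecasts are scored against.
    label = series[-prediction_length:]
    return history, label


series = list(range(10))
history, label = split_last_window(series, prediction_length=3)
print(history)  # [0, 1, 2, 3, 4, 5, 6]
print(label)    # [7, 8, 9]
```

Because the model never sees the held-out window during training, the score on it gives Optuna a signal that is not inflated by memorizing the training data.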

[6]:

def dataentry_to_dataframe(entry):
    # Build a one-column DataFrame indexed by the periods covered by the series
    df = pd.DataFrame(
        entry["target"],
        columns=[entry.get("item_id")],
        index=pd.period_range(
            start=entry["start"], periods=len(entry["target"]), freq=entry["start"].freq
        ),
    )

    return df


class DeepARTuningObjective:
    def __init__(
        self, dataset, prediction_length, freq, metric_type="mean_wQuantileLoss"
    ):
        self.dataset = dataset
        self.prediction_length = prediction_length
        self.freq = freq
        self.metric_type = metric_type

        # Hold out the last prediction_length points of each series for validation
        self.train, test_template = split(dataset, offset=-self.prediction_length)
        validation = test_template.generate_instances(
            prediction_length=prediction_length
        )
        self.validation_input = [entry[0] for entry in validation]
        self.validation_label = [
            dataentry_to_dataframe(entry[1]) for entry in validation
        ]

    def get_params(self, trial) -> dict:
        # Search space: both bounds are inclusive
        return {
            "num_layers": trial.suggest_int("num_layers", 1, 5),
            "hidden_size": trial.suggest_int("hidden_size", 10, 50),
        }

    def __call__(self, trial):
        params = self.get_params(trial)
        estimator = DeepAREstimator(
            num_layers=params["num_layers"],
            hidden_size=params["hidden_size"],
            prediction_length=self.prediction_length,
            freq=self.freq,
            trainer_kwargs={
                "enable_progress_bar": False,
                "enable_model_summary": False,
                "max_epochs": 10,
            },
        )

        # Train on the truncated series, then forecast the held-out window
        predictor = estimator.train(self.train, cache_data=True)
        forecast_it = predictor.predict(self.validation_input)

        forecasts = list(forecast_it)

        evaluator = Evaluator(quantiles=[0.1, 0.5, 0.9])
        agg_metrics, item_metrics = evaluator(
            self.validation_label, forecasts, num_series=len(self.dataset)
        )
        # The value returned here is what Optuna minimizes
        return agg_metrics[self.metric_type]
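The objective above returns the mean_wQuantileLoss entry of the aggregated metrics. A minimal sketch of such a metric is shown below, assuming it is the pinball (quantile) loss summed over all series, normalized by the sum of absolute target values, and averaged over the quantile levels. The function names and toy numbers are ours, and the Evaluator's actual implementation may differ in details such as the handling of missing values.

```python
def quantile_loss(target, forecast, q):
    """Pinball loss summed over time steps; the factor 2 makes q=0.5 the absolute error."""
    return 2.0 * sum(
        abs((f - y) * ((1.0 if y <= f else 0.0) - q))
        for y, f in zip(target, forecast)
    )


def mean_weighted_quantile_loss(targets, quantile_forecasts):
    """targets: list of series; quantile_forecasts: {q: list of forecast series}."""
    abs_target_sum = sum(abs(y) for series in targets for y in series)
    weighted = []
    for q, forecasts in quantile_forecasts.items():
        total = sum(quantile_loss(t, f, q) for t, f in zip(targets, forecasts))
        weighted.append(total / abs_target_sum)
    # Average the normalized losses over all quantile levels
    return sum(weighted) / len(weighted)


# Toy data: one series with two time steps and three quantile forecasts
targets = [[10.0, 20.0]]
quantile_forecasts = {
    0.1: [[8.0, 16.0]],
    0.5: [[10.0, 22.0]],
    0.9: [[13.0, 25.0]],
}
score = mean_weighted_quantile_loss(targets, quantile_forecasts)
print(round(score, 4))  # approximately 0.0533
```

Lower values are better, which is why the study is created with direction="minimize".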

We can now invoke the Optuna tuning process.

[7]:

import time

start_time = time.time()
study = optuna.create_study(direction="minimize")
study.optimize(
    DeepARTuningObjective(
        dataset.train, dataset.metadata.prediction_length, dataset.metadata.freq
    ),
    n_trials=5,
)

print("Number of finished trials: {}".format(len(study.trials)))

print("Best trial:")
trial = study.best_trial

print(" Value: {}".format(trial.value))

print(" Params: ")
for key, value in trial.params.items():
    print(" {}: {}".format(key, value))
print(time.time() - start_time)

[I 2024-05-31 18:25:30,746] A new study created in memory with name: no-name-33a5f099-a6bc-49b7-ae60-e8db6064e006

INFO: GPU available: False, used: False

INFO: TPU available: False, using: 0 TPU cores

INFO: IPU available: False, using: 0 IPUs

INFO: HPU available: False, using: 0 HPUs

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/lightning/pytorch/trainer/connectors/logger_connector/logger_connector.py:67: Starting from v1.9.0, `tensorboardX` has been removed as a dependency of the `lightning.pytorch` package, due to potential conflicts with other packages in the ML ecosystem. For this reason, `logger=True` will use `CSVLogger` as the default logger, unless the `tensorboard` or `tensorboardX` packages are found. Please `pip install lightning[extra]` or one of them to enable TensorBoard support by default

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/lightning/pytorch/trainer/configuration_validator.py:74: You defined a `validation_step` but have no `val_dataloader`. Skipping val loop.

INFO: Epoch 0, global step 50: 'train_loss' reached 5.97729 (best 5.97729), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=0-step=50.ckpt' as top 1

INFO: Epoch 1, global step 100: 'train_loss' reached 5.08986 (best 5.08986), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=1-step=100.ckpt' as top 1

INFO: Epoch 2, global step 150: 'train_loss' reached 4.82337 (best 4.82337), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=2-step=150.ckpt' as top 1

INFO: Epoch 3, global step 200: 'train_loss' reached 4.66130 (best 4.66130), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=3-step=200.ckpt' as top 1

INFO: Epoch 4, global step 250: 'train_loss' reached 4.65298 (best 4.65298), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=4-step=250.ckpt' as top 1

INFO: Epoch 5, global step 300: 'train_loss' reached 4.45146 (best 4.45146), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=5-step=300.ckpt' as top 1

INFO: Epoch 6, global step 350: 'train_loss' reached 4.43675 (best 4.43675), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=6-step=350.ckpt' as top 1

INFO: Epoch 7, global step 400: 'train_loss' was not in top 1

INFO: Epoch 8, global step 450: 'train_loss' was not in top 1

INFO: Epoch 9, global step 500: 'train_loss' reached 4.42556 (best 4.42556), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_1/checkpoints/epoch=9-step=500.ckpt' as top 1

INFO: `Trainer.fit` stopped: `max_epochs=10` reached.

Running evaluation: 100%|██████████| 414/414 [00:00<00:00, 8080.46it/s]

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/pandas/core/dtypes/astype.py:138: UserWarning: Warning: converting a masked element to nan.

return arr.astype(dtype, copy=True)

[I 2024-05-31 18:25:46,776] Trial 0 finished with value: 0.12650161257328987 and parameters: {'num_layers': 2, 'hidden_size': 23}. Best is trial 0 with value: 0.12650161257328987.

[I 2024-05-31 18:25:56,608] Trial 1 finished with value: 0.08251994522973598 and parameters: {'num_layers': 1, 'hidden_size': 20}. Best is trial 1 with value: 0.08251994522973598.

[I 2024-05-31 18:26:09,527] Trial 2 finished with value: 0.11560589914025643 and parameters: {'num_layers': 3, 'hidden_size': 18}. Best is trial 1 with value: 0.08251994522973598.

[I 2024-05-31 18:26:36,714] Trial 3 finished with value: 0.06051118673905025 and parameters: {'num_layers': 4, 'hidden_size': 46}. Best is trial 3 with value: 0.06051118673905025.

INFO: GPU available: False, used: False

INFO:lightning.pytorch.utilities.rank_zero:GPU available: False, used: False

INFO: TPU available: False, using: 0 TPU cores

INFO:lightning.pytorch.utilities.rank_zero:TPU available: False, using: 0 TPU cores

INFO: IPU available: False, using: 0 IPUs

INFO:lightning.pytorch.utilities.rank_zero:IPU available: False, using: 0 IPUs

INFO: HPU available: False, using: 0 HPUs

INFO:lightning.pytorch.utilities.rank_zero:HPU available: False, using: 0 HPUs

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/lightning/pytorch/trainer/configuration_validator.py:74: You defined a `validation_step` but have no `val_dataloader`. Skipping val loop.

INFO: Epoch 0, global step 50: 'train_loss' reached 5.75196 (best 5.75196), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=0-step=50.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 0, global step 50: 'train_loss' reached 5.75196 (best 5.75196), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=0-step=50.ckpt' as top 1

INFO: Epoch 1, global step 100: 'train_loss' reached 5.26680 (best 5.26680), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=1-step=100.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 1, global step 100: 'train_loss' reached 5.26680 (best 5.26680), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=1-step=100.ckpt' as top 1

INFO: Epoch 2, global step 150: 'train_loss' reached 5.14737 (best 5.14737), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=2-step=150.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 2, global step 150: 'train_loss' reached 5.14737 (best 5.14737), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=2-step=150.ckpt' as top 1

INFO: Epoch 3, global step 200: 'train_loss' reached 4.99823 (best 4.99823), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=3-step=200.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 3, global step 200: 'train_loss' reached 4.99823 (best 4.99823), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=3-step=200.ckpt' as top 1

INFO: Epoch 4, global step 250: 'train_loss' reached 4.85785 (best 4.85785), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=4-step=250.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 4, global step 250: 'train_loss' reached 4.85785 (best 4.85785), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=4-step=250.ckpt' as top 1

INFO: Epoch 5, global step 300: 'train_loss' reached 4.74995 (best 4.74995), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=5-step=300.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 5, global step 300: 'train_loss' reached 4.74995 (best 4.74995), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=5-step=300.ckpt' as top 1

INFO: Epoch 6, global step 350: 'train_loss' reached 4.57844 (best 4.57844), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=6-step=350.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 6, global step 350: 'train_loss' reached 4.57844 (best 4.57844), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=6-step=350.ckpt' as top 1

INFO: Epoch 7, global step 400: 'train_loss' reached 4.34586 (best 4.34586), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=7-step=400.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 7, global step 400: 'train_loss' reached 4.34586 (best 4.34586), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=7-step=400.ckpt' as top 1

INFO: Epoch 8, global step 450: 'train_loss' reached 4.28116 (best 4.28116), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=8-step=450.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 8, global step 450: 'train_loss' reached 4.28116 (best 4.28116), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=8-step=450.ckpt' as top 1

INFO: Epoch 9, global step 500: 'train_loss' reached 4.26179 (best 4.26179), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=9-step=500.ckpt' as top 1

INFO:lightning.pytorch.utilities.rank_zero:Epoch 9, global step 500: 'train_loss' reached 4.26179 (best 4.26179), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_5/checkpoints/epoch=9-step=500.ckpt' as top 1

INFO: `Trainer.fit` stopped: `max_epochs=10` reached.

INFO:lightning.pytorch.utilities.rank_zero:`Trainer.fit` stopped: `max_epochs=10` reached.

Running evaluation: 100%|██████████| 414/414 [00:00<00:00, 7385.91it/s]

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/pandas/core/dtypes/astype.py:138: UserWarning: Warning: converting a masked element to nan.

return arr.astype(dtype, copy=True)


[I 2024-05-31 18:26:51,459] Trial 4 finished with value: 0.08992057277146621 and parameters: {'num_layers': 4, 'hidden_size': 18}. Best is trial 3 with value: 0.06051118673905025.

Number of finished trials: 5

Best trial:

Value: 0.06051118673905025

Params:

num_layers: 4

hidden_size: 46

80.71679067611694

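Re-training the model#

After obtaining the best hyperparameters with Optuna, you can set them on the DeepAR estimator and re-train the model on the whole training subset considered here.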
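As a rough illustration of what "best hyperparameters" means here, the sketch below picks the trial with the lowest objective value and merges its parameters with the fixed estimator settings. It is plain Python rather than the Optuna API: the dictionaries and the names finished_trials, fixed and estimator_kwargs are hypothetical, minimization is assumed because the log reports the lower value as best, and only the two trials named in the final log line are listed.

```python
# Values copied from the tuning log; the data structure itself is hypothetical.
finished_trials = [
    {"number": 3, "value": 0.06051118673905025,
     "params": {"num_layers": 4, "hidden_size": 46}},
    {"number": 4, "value": 0.08992057277146621,
     "params": {"num_layers": 4, "hidden_size": 18}},
]

# Assumption: the study minimizes its objective, so the smallest value wins.
best = min(finished_trials, key=lambda t: t["value"])

# Settings that are not tuned, taken from the re-training cell and the metadata.
fixed = {"prediction_length": 48, "context_length": 100, "freq": "H"}

# Tuned parameters are merged on top of the fixed ones.
estimator_kwargs = {**fixed, **best["params"]}
print(best["number"], estimator_kwargs)
```

In the notebook itself the same information is read from trial.params, as the next cell shows.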

[8]:

estimator = DeepAREstimator(
    num_layers=trial.params["num_layers"],
    hidden_size=trial.params["hidden_size"],
    prediction_length=dataset.metadata.prediction_length,
    context_length=100,
    freq=dataset.metadata.freq,
    trainer_kwargs={
        "enable_progress_bar": False,
        "enable_model_summary": False,
        "max_epochs": 10,
    },
)

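After specifying the estimator with all the necessary hyperparameters, we can train it on the training dataset dataset.train by invoking the estimator's train method. The training algorithm returns a fitted model (a Predictor in GluonTS parlance) that can be used to obtain forecasts.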

[9]:

predictor = estimator.train(dataset.train, cache_data=True)

INFO: GPU available: False, used: False

INFO: TPU available: False, using: 0 TPU cores

INFO: IPU available: False, using: 0 IPUs

INFO: HPU available: False, using: 0 HPUs

/opt/hostedtoolcache/Python/3.8.18/x64/lib/python3.8/site-packages/lightning/pytorch/trainer/configuration_validator.py:74: You defined a `validation_step` but have no `val_dataloader`. Skipping val loop.

INFO: Epoch 0, global step 50: 'train_loss' reached 5.43426 (best 5.43426), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=0-step=50.ckpt' as top 1

INFO: Epoch 1, global step 100: 'train_loss' reached 5.19284 (best 5.19284), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=1-step=100.ckpt' as top 1

INFO: Epoch 2, global step 150: 'train_loss' reached 5.03852 (best 5.03852), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=2-step=150.ckpt' as top 1

INFO: Epoch 3, global step 200: 'train_loss' reached 4.74886 (best 4.74886), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=3-step=200.ckpt' as top 1

INFO: Epoch 4, global step 250: 'train_loss' reached 4.52917 (best 4.52917), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=4-step=250.ckpt' as top 1

INFO: Epoch 5, global step 300: 'train_loss' reached 4.33903 (best 4.33903), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=5-step=300.ckpt' as top 1

INFO: Epoch 6, global step 350: 'train_loss' was not in top 1

INFO: Epoch 7, global step 400: 'train_loss' reached 3.88879 (best 3.88879), saving model to '/home/runner/work/gluonts/gluonts/lightning_logs/version_6/checkpoints/epoch=7-step=400.ckpt' as top 1

INFO: Epoch 8, global step 450: 'train_loss' was not in top 1

INFO: Epoch 9, global step 500: 'train_loss' was not in top 1

INFO: `Trainer.fit` stopped: `max_epochs=10` reached.

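Visualize and evaluate forecasts#

With a predictor in hand, we can now predict the last window of the test dataset and evaluate the model's performance.

GluonTS provides the make_evaluation_predictions function, which automates the process of prediction and model evaluation. Roughly, this function performs the following steps:

- Removes the final window of length prediction_length from the test dataset, which is the window we want to predict.
- Uses the predictor to forecast (in the form of sample paths) the "future" window that was just removed, based on the remaining data.
- Returns the forecasts together with the ground truth values for the same time range (as Python generator objects).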
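The three steps can be sketched in plain Python. This is only an illustration under simplifying assumptions, not the GluonTS implementation: naive_predictor and evaluation_predictions are hypothetical names, the forecast is a single point path rather than a set of sample paths, and forecast and ground truth are yielded as pairs instead of two separate generators.

```python
from typing import Callable, Iterator, List, Sequence, Tuple


def naive_predictor(history: Sequence[float], prediction_length: int) -> List[float]:
    # Hypothetical stand-in for a trained model: repeat the last observed value.
    return [history[-1]] * prediction_length


def evaluation_predictions(
    test_series: Sequence[Sequence[float]],
    predictor: Callable[[Sequence[float], int], List[float]],
    prediction_length: int,
) -> Iterator[Tuple[List[float], List[float]]]:
    for series in test_series:
        # Step 1: cut off the final window that we want to predict.
        history = list(series[:-prediction_length])
        truth = list(series[-prediction_length:])
        # Step 2: forecast the removed window from the remaining data only.
        forecast = predictor(history, prediction_length)
        # Step 3: hand back the forecast together with the ground truth.
        yield forecast, truth


series = [[1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 10.0, 12.0, 11.0, 13.0]]
pairs = list(evaluation_predictions(series, naive_predictor, prediction_length=2))
print(pairs)
```

Because the result is a generator, nothing is computed until it is consumed, which is why the notebook converts the returned iterators to lists before evaluating.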

[10]:

from gluonts.evaluation import make_evaluation_predictions

forecast_it, ts_it = make_evaluation_predictions(
    dataset=dataset.test,
    predictor=predictor,
)

First, we can convert these generators to lists to ease the subsequent computations.

[11]:

forecasts = list(forecast_it)

tss = list(ts_it)

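Forecast objects have a plot method that summarizes the forecast paths as the mean and prediction intervals. The prediction intervals are shaded in different colors as a "fan chart".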
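To make the summarization step concrete, here is a minimal sketch of how a set of sample paths could be reduced to a mean and one prediction interval per time step. The helper names empirical_quantile and summarize are hypothetical, the sample paths are made up, and the nearest-rank quantile rule is just one of several conventions, so the numbers need not match what the plot method draws.

```python
from statistics import mean
from typing import Dict, List, Sequence


def empirical_quantile(values: Sequence[float], q: float) -> float:
    # Nearest-rank quantile on the sorted samples (one of several conventions).
    ordered = sorted(values)
    index = min(len(ordered) - 1, max(0, round(q * (len(ordered) - 1))))
    return ordered[index]


def summarize(
    samples: List[List[float]], lower: float = 0.1, upper: float = 0.9
) -> Dict[str, List[float]]:
    # samples[i][t] is the value of sample path i at forecast step t.
    steps = list(zip(*samples))
    return {
        "mean": [mean(step) for step in steps],
        "lower": [empirical_quantile(step, lower) for step in steps],
        "upper": [empirical_quantile(step, upper) for step in steps],
    }


# Five hypothetical sample paths over a three-step horizon.
paths = [
    [1.0, 2.0, 3.0],
    [2.0, 3.0, 4.0],
    [3.0, 4.0, 5.0],
    [4.0, 5.0, 6.0],
    [5.0, 6.0, 7.0],
]
summary = summarize(paths)
print(summary)
```

A fan chart repeats this for several nested intervals and shades each band differently.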

[12]:

plt.plot(tss[0][-150:].to_timestamp())

forecasts[0].plot(show_label=True)

plt.legend()

[12]:

<matplotlib.legend.Legend at 0x7f6eeaf53040>

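We can also evaluate the quality of the forecasts numerically. In GluonTS, the Evaluator class can compute aggregate performance metrics as well as metrics per time series (which is useful for analyzing performance across heterogeneous time series).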

[13]:

from gluonts.evaluation import Evaluator

[14]:

evaluator = Evaluator(quantiles=[0.1, 0.5, 0.9])

agg_metrics, item_metrics = evaluator(tss, forecasts)

Running evaluation: 414it [00:00, 7500.25it/s]
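The quantiles passed to the Evaluator determine which QuantileLoss and Coverage entries appear in the results. The sketch below shows one common way to define both quantities for a single series. The functions quantile_loss and coverage are hypothetical helpers with made-up inputs, and the factor of 2 in the loss is an assumed convention; it is at least consistent with the aggregate output of this notebook, where QuantileLoss[0.5] and abs_error agree up to rounding.

```python
from typing import Sequence


def quantile_loss(target: Sequence[float], forecast: Sequence[float], q: float) -> float:
    # Pinball loss summed over time steps, scaled by 2 (assumed convention).
    total = 0.0
    for y, y_hat in zip(target, forecast):
        indicator = 1.0 if y <= y_hat else 0.0
        total += abs((y_hat - y) * (indicator - q))
    return 2.0 * total


def coverage(target: Sequence[float], forecast: Sequence[float]) -> float:
    # Fraction of time steps where the target lies below the quantile forecast.
    hits = sum(1 for y, y_hat in zip(target, forecast) if y < y_hat)
    return hits / len(target)


# Hypothetical targets and a hypothetical median (q = 0.5) forecast.
target = [10.0, 12.0, 9.0, 11.0]
median_forecast = [11.0, 11.0, 10.0, 11.0]

loss = quantile_loss(target, median_forecast, 0.5)
abs_error = sum(abs(y - f) for y, f in zip(target, median_forecast))
cov = coverage(target, median_forecast)
print(loss, abs_error, cov)
```

For a well-calibrated forecast, the coverage of the q-quantile should be close to q.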

The aggregate metrics are computed across both time steps and time series.

[15]:

print(json.dumps(agg_metrics, indent=4))

{
    "MSE": 2904649.6919837557,
    "abs_error": 7130449.606354713,
    "abs_target_sum": 145558863.59960938,
    "abs_target_mean": 7324.822041043146,
    "seasonal_error": 336.9046924038305,
    "MASE": 2.7545853877072,
    "MAPE": 0.22468119902887207,
    "sMAPE": 0.16241664783474233,
    "MSIS": 28.85744362749451,
    "num_masked_target_values": 0.0,
    "QuantileLoss[0.1]": 3311120.5242288597,
    "Coverage[0.1]": 0.07830112721417071,
    "QuantileLoss[0.5]": 7130449.615060806,
    "Coverage[0.5]": 0.40247584541062803,
    "QuantileLoss[0.9]": 3864508.7020874014,
    "Coverage[0.9]": 0.8110909822866345,
    "RMSE": 1704.3032863853064,
    "NRMSE": 0.23267504341205705,
    "ND": 0.04898670840113545,
    "wQuantileLoss[0.1]": 0.022747639287270072,
    "wQuantileLoss[0.5]": 0.048986708460946944,
    "wQuantileLoss[0.9]": 0.026549456395301044,
    "mean_absolute_QuantileLoss": 4768692.947125689,
    "mean_wQuantileLoss": 0.032761268047839354,
    "MAE_Coverage": 0.37036366076221156,
    "OWA": NaN
}
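Several of the aggregate entries are derived from others, which makes a useful sanity check when reading this output. The sketch below recomputes RMSE, NRMSE, ND and the weighted quantile losses from the printed values. The relationships are inferred from the numbers in this output rather than quoted from the library, so treat them as assumptions that happen to reproduce the printed figures.

```python
import math

# Values copied from the aggregate metrics printed in the notebook.
agg = {
    "MSE": 2904649.6919837557,
    "abs_error": 7130449.606354713,
    "abs_target_sum": 145558863.59960938,
    "abs_target_mean": 7324.822041043146,
    "QuantileLoss[0.1]": 3311120.5242288597,
    "QuantileLoss[0.5]": 7130449.615060806,
    "QuantileLoss[0.9]": 3864508.7020874014,
}

# Root mean squared error, then normalized by the mean absolute target.
rmse = math.sqrt(agg["MSE"])
nrmse = rmse / agg["abs_target_mean"]

# Normalized deviation: total absolute error over total absolute target.
nd = agg["abs_error"] / agg["abs_target_sum"]

# Weighted quantile losses and their average over the three quantiles.
w_ql = {q: agg[f"QuantileLoss[{q}]"] / agg["abs_target_sum"] for q in (0.1, 0.5, 0.9)}
mean_w_ql = sum(w_ql.values()) / len(w_ql)

print(rmse, nrmse, nd, mean_w_ql)
```

The results line up with the RMSE, NRMSE, ND and mean_wQuantileLoss entries in the output.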

The individual metrics are aggregated only across time steps.

[16]:

item_metrics.head()

[16]:

| | item_id | forecast_start | MSE | abs_error | abs_target_sum | abs_target_mean | seasonal_error | MASE | MAPE | sMAPE | num_masked_target_values | ND | MSIS | QuantileLoss[0.1] | Coverage[0.1] | QuantileLoss[0.5] | Coverage[0.5] | QuantileLoss[0.9] | Coverage[0.9] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 1750-01-30 04:00 | 1641.944499 | 1555.849609 | 31644.0 | 659.250000 | 42.371302 | 0.764988 | 0.048947 | 0.048178 | 0.0 | 0.049167 | 5.675331 | 668.749005 | 0.104167 | 1555.849579 | 0.666667 | 742.963159 | 1.000000 |
| 1 | 1 | 1750-01-30 04:00 | 167186.770833 | 18859.820312 | 124149.0 | 2586.437500 | 165.107988 | 2.379733 | 0.150390 | 0.139492 | 0.0 | 0.151913 | 13.140830 | 12771.814990 | 0.812500 | 18859.821777 | 1.000000 | 6181.971582 | 1.000000 |
| 2 | 2 | 1750-01-30 04:00 | 65984.177083 | 10269.212891 | 65030.0 | 1354.791667 | 78.889053 | 2.711934 | 0.147278 | 0.162526 | 0.0 | 0.157915 | 41.837951 | 2931.179285 | 0.000000 | 10269.212341 | 0.000000 | 11613.746228 | 0.250000 |
| 3 | 3 | 1750-01-30 04:00 | 199187.416667 | 16794.406250 | 235783.0 | 4912.145833 | 258.982249 | 1.350994 | 0.070886 | 0.070058 | 0.0 | 0.071228 | 8.733110 | 9183.591992 | 0.166667 | 16794.407471 | 0.437500 | 6128.578857 | 0.750000 |
| 4 | 4 | 1750-01-30 04:00 | 89862.916667 | 11872.976562 | 131088.0 | 2731.000000 | 200.494083 | 1.233721 | 0.090101 | 0.095563 | 0.0 | 0.090573 | 8.756501 | 4312.656763 | 0.020833 | 11872.977051 | 0.187500 | 8194.775342 | 0.520833 |
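The per-series columns can be cross-checked against each other. As a small worked example, the sketch below takes the first row of the table and recomputes abs_target_mean, MASE and ND from abs_error, abs_target_sum and seasonal_error, using the prediction length of 48 reported by the dataset metadata. The formulas are inferred from the printed values rather than quoted from the library, so they are assumptions that reproduce this row.

```python
# Forecast horizon reported by dataset.metadata.prediction_length.
prediction_length = 48

# First row of item_metrics, copied from the table (rounded as displayed).
row = {
    "abs_error": 1555.849609,
    "abs_target_sum": 31644.0,
    "seasonal_error": 42.371302,
}

# Mean absolute target over the forecast window.
abs_target_mean = row["abs_target_sum"] / prediction_length

# MASE: mean absolute error scaled by the in-sample seasonal naive error.
mae = row["abs_error"] / prediction_length
mase = mae / row["seasonal_error"]

# ND: total absolute error relative to the total absolute target.
nd = row["abs_error"] / row["abs_target_sum"]

print(abs_target_mean, round(mase, 6), round(nd, 6))
```

A MASE below 1 for this series means the forecast beats the seasonal naive baseline on it, while the later rows with values above 1 do not.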

[17]:

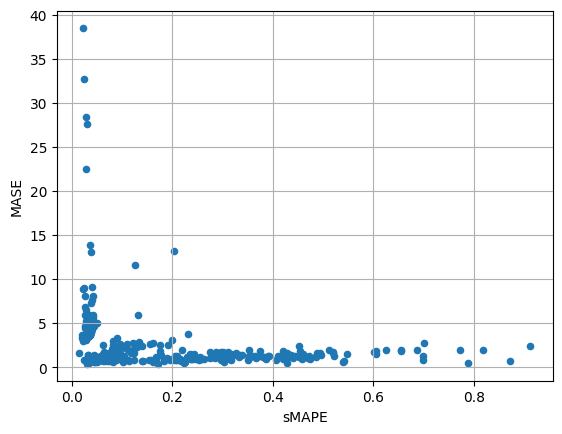

item_metrics.plot(x="sMAPE", y="MASE", kind="scatter")

plt.grid(which="both")

plt.show()